
This time I’m preparing for THE HOLY WAR.

Dice wars: linear VS bell curve.

I’m lost in many other sidetracks, but I was trying to wrap my head around a very basic mathematical problem, and I might as well write it down since I stumble on it constantly.

This isn’t about the solo boardgame project; it’s more about my other project, the very complex combat system. But I guess I’ll use this thread to write about all these things (next, I want to analyze two of the lesser-known systems, Chivalry & Sorcery and Ysgarth).

The theme here is about the basic mechanics of a to-hit roll.

If we consider the classic D&D d20 on one side, and on the other systems like RuneQuest or Harnmaster, which are based on d100, there doesn’t seem to be a concrete mathematical difference…

For example, if my sword skill is 50%, rolling 50 or under on the d100 is a hit, and rolling over it is a miss.

The difference from the d20 seems to be just granularity: the d20 progresses in 5% steps, in exactly the same linear way. So let’s say my THAC0 is 16 and I’m hitting some guy with Armor Class 5: I need 16 - 5 = 11, so if I roll 1-10 it’s a miss, and if I roll 11-20 it’s a hit. We get exactly the same result as the system above.

THAC0 improves (goes down) as you level up, and each point is a 5% increment, in the same way a skill system raises the skill value by whatever increment you decide to use.

Are we dealing with mechanically identical systems, at a basic level?

What I was trying to figure out is how this (a linear distribution) compares to a system like GURPS (3d6), which uses a bell curve instead.

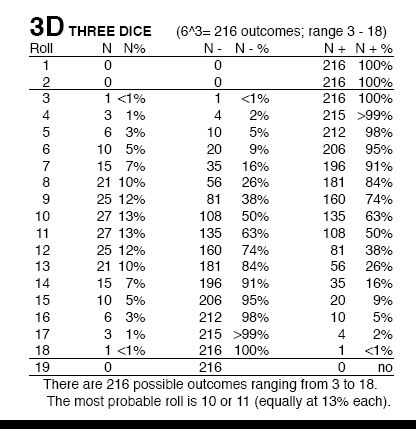

We lose some granularity because the results only go from 3 to 18, so there are four fewer steps than on a d20. But because of the different distribution, the most common results also fall in a narrower range: the eight results from 7 to 14 already make up roughly 80% (81.5%) of all possible results, and the ten from 6 to 15 about 91%. On a d20, that 80% is spread across 16 results instead of 8.
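To double-check those shares, here’s a small Python sketch that enumerates every 3d6 outcome exactly (no simulation) and compares the central ranges with a d20. The helper names `distribution` and `share` are my own.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

def distribution(dice: int, sides: int) -> dict[int, Fraction]:
    """Exact probability of each total when rolling `dice` dice with `sides` faces."""
    counts = Counter(sum(faces) for faces in product(range(1, sides + 1), repeat=dice))
    total = sides ** dice
    return {value: Fraction(n, total) for value, n in sorted(counts.items())}

def share(dist: dict[int, Fraction], low: int, high: int) -> Fraction:
    """Probability that the total falls in [low, high], inclusive."""
    return sum((p for v, p in dist.items() if low <= v <= high), Fraction(0))

three_d6 = distribution(3, 6)
one_d20 = distribution(1, 20)

print(f"3d6  7-14: {float(share(three_d6, 7, 14)):.2%}")  # 81.48% (8 results)
print(f"3d6  6-15: {float(share(three_d6, 6, 15)):.2%}")  # 90.74% (10 results)
print(f"d20  3-18: {float(share(one_d20, 3, 18)):.2%}")   # 80.00% (16 results)
```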

What are the merits of a bell curve, then?

Because in the end, for a to-hit roll, the value is still a target number. If you use 3d6 and have to roll 11 or better to hit, you still have exactly the same 50% probability as the systems above.

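If that’s true, then the mechanical difference can only show up when we consider the progress system. Each +1 to the target number adds the chance of rolling that exact total, so moving the target from 3 to 4, from 4 to 5, and so on up to 11 adds:

+1.39% +2.78% +4.63% +6.94% +9.72% +11.57% +12.5% +12.5%, and then the same values in reverse.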

It’s essentially what’s being shown in the third column of this chart, from the compiled rules of Traveller:

It translates to: slow both to learn and to master. If you pair this with a level system where you gain a +1 bonus every level, the character will almost always miss for the first few levels, then improve rapidly, and then grow progressively more slowly at the higher levels. (But not quite: GURPS makes the cost of each +1 depend on your Dex value…)
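The same progression can be printed step by step. This Python sketch assumes a roll-under target that starts at 3 and gains +1 per step (a stand-in for “a +1 bonus every level”) and shows the cumulative 3d6 chance next to the gain from that single step; on a d20, every step would add a flat 5%.

```python
from fractions import Fraction
from itertools import product

# Every possible 3d6 total, one entry per combination (216 in all).
rolls = [sum(faces) for faces in product(range(1, 7), repeat=3)]

def p_at_or_under(target: int) -> Fraction:
    """Exact chance of rolling the target or less on 3d6 (roll-under, GURPS style)."""
    return Fraction(sum(1 for r in rolls if r <= target), len(rolls))

# Hypothetical level track: each level raises the target number by exactly 1.
for target in range(3, 19):
    chance = p_at_or_under(target)
    gain = chance - p_at_or_under(target - 1)
    print(f"target {target:2d}: {float(chance):7.2%}  (+{float(gain):.2%})")
```

The gains are small at both ends and peak at 12.5% around targets 10 and 11, which is the “slow, then fast, then slow” curve described above.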

I’m trying to distill “meaning” out of all this.

Essentially, when you have a target number, a bell curve or a linear one makes no difference. You either hit or miss; it’s a binary result and the distribution doesn’t affect it. For example, if we want to use a 3d6 bell curve but map it onto a d100 system, we can look at the image above, in the 5th column. Those are the “steps” available with 3d6 granularity. So, in a d100 system, you could have a skill of 16%, and that’s practically the same as a “7” on 3d6: rolling 3d6 you still have about 16% (16.2%) chance of rolling 7 or less. Simply put, rolling a d100 and succeeding only on a <= 16 is mechanically equivalent (to within a fraction of a percent) to rolling 3d6 and succeeding on a <= 7.

What it does affect is the progress system, or the way that target number “moves”. But with a d100 I could mechanically copy the outcome by designing a progress system around the same values as the one based on 3d6.
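That copying can be automated. Here’s a Python sketch that converts each 3d6 roll-under target into the nearest whole-percent d100 skill, which is all a d100 progress table would need to mirror the 3d6 one. Rounding to whole percents is my assumption (Python’s `round` sends exact halves such as 37.5 and 62.5 to the even neighbor), so the match is close but not perfect.

```python
from fractions import Fraction
from itertools import product

rolls = [sum(faces) for faces in product(range(1, 7), repeat=3)]

def d100_equivalent(target: int) -> int:
    """Nearest whole-percent d100 skill for a 3d6 roll-under target."""
    chance = Fraction(sum(1 for r in rolls if r <= target), len(rolls))
    return round(chance * 100)

# A d100 progress table that copies the 3d6 one, step for step.
table = {target: d100_equivalent(target) for target in range(3, 19)}
print(table)
```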

This, oddly enough, was triggered by reading about an OSR system (OSR = Old School Renaissance) called “Dark Dungeons”, that mimics the rules of the basic D&D set.

What the rules explain is that we all know how clunky a system like THAC0 is, and there are always debates about what’s the best system.

Dark Dungeons replaces it with a system that it claims is mathematically identical but much simpler to use: instead of a THAC0 value, you simply have an attack bonus, given by your class and Strength bonus. So the to-hit roll is:

d20 + attack bonus + armor value of the enemy.

If the result is 20 or higher, you hit, if not, you miss.

Armor value in this case is descending, but it works because you don’t have to subtract it from some arbitrary value; you simply add it to the roll. So someone without armor has a value of 9, which will obviously push the result closer to 20.
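Dark Dungeons’ claim of mathematical identity is easy to check by brute force. This Python sketch assumes the attack bonus equals 20 minus THAC0 and ignores any natural 1 / natural 20 rules; under those assumptions both checks agree on every roll, THAC0 and armor class in the tested ranges. The function names are hypothetical.

```python
def hits_thac0(d20: int, thac0: int, armor_class: int) -> bool:
    """Classic check: the roll must reach THAC0 minus the target's armor class."""
    return d20 >= thac0 - armor_class

def hits_dark_dungeons(d20: int, attack_bonus: int, armor_class: int) -> bool:
    """Dark Dungeons style: add the bonus and the descending AC, compare against 20."""
    return d20 + attack_bonus + armor_class >= 20

# Assumption: attack_bonus = 20 - THAC0, which is what makes the two checks line up.
for thac0 in range(1, 21):
    for armor_class in range(-10, 11):
        for d20 in range(1, 21):
            assert hits_thac0(d20, thac0, armor_class) == \
                hits_dark_dungeons(d20, 20 - thac0, armor_class)

# The earlier example: THAC0 16 (attack bonus 4) against AC 5 hits on 11-20.
print(sum(hits_dark_dungeons(r, 4, 5) for r in range(1, 21)) / 20)  # 0.5
```

Algebraically it’s one rearrangement: d20 >= THAC0 - AC becomes d20 + (20 - THAC0) + AC >= 20.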

Ultimately it’s all about ease of use and nothing else: if the systems are mathematically identical, there’s nothing to consider besides ease of use.

While looking into this I found this page: http://www.darkshire.net/jhkim/rpg/systemdesign/dice-motive.html

It neatly explains that there’s a hierarchy of “ease of use”: comparison is easier than addition, which is easier than subtraction, which is easier than multiplication, which is easier than division. (By “comparison” I think it means “less than” or “more than”.)

The classic D&D THAC0 is made of: dice + attack bonus, compared to the armor value subtracted from a class-dependent target value.

Versus the simplified system in “Dark Dungeons” that is: dice + attack bonus + armor class, compared to target value (fixed at 20).

So: addition, subtraction, comparison VS addition, addition, comparison.

It’s simpler in two ways: an addition replaces the subtraction, and the comparison is always against 20, so you don’t have to look it up. Both attack bonus and armor class still have to be gathered every time in both systems, since they vary.

From that sidetrack the problem becomes: is there something that a bell curve offers and that can’t be “mapped” on a d100 system (or other linear systems)? Is there something that is intrinsically different?

In general, the distribution of the possible values does matter, for example with the typical damage dice. Rolling 1d12 is different from rolling 2d6, because you’ll roll a 12 three times as often with the d12. But even in this case, if you are fighting a dragon with a million hit points, it barely matters: over time those rolls even out around their averages, and the two options become nearly identical (the d12 averages 6.5, 2d6 averages 7).
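Exact numbers for the damage example, as a Python sketch: the d12 rolls a 12 three times as often, but over a long fight only the averages matter, and they differ by about 7.7%.

```python
from fractions import Fraction
from itertools import product

d12 = list(range(1, 13))
two_d6 = [a + b for a, b in product(range(1, 7), repeat=2)]

def chance(outcomes: list[int], value: int) -> Fraction:
    """Exact chance of rolling `value`, given every equally likely outcome."""
    return Fraction(outcomes.count(value), len(outcomes))

def mean(outcomes: list[int]) -> Fraction:
    """Exact average of equally likely outcomes."""
    return Fraction(sum(outcomes), len(outcomes))

print("P(12):", chance(d12, 12), "vs", chance(two_d6, 12))  # P(12): 1/12 vs 1/36
print("mean: ", mean(d12), "vs", mean(two_d6))              # mean:  13/2 vs 7
```

Against the million-hit-point dragon, that means roughly 153,846 d12 hits versus 142,857 2d6 hits.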

The general consensus (and the math; we aren’t dealing with “opinions” here, the only opinion is the potential lack of insight) is that the bell curve is more “skill based”, and so less random. The distribution of results is narrower, so the outcome is usually more predictable, whereas in a linear system extreme results are just as frequent as middling ones. In fact, the bell curve is often paired with dice pools (Earthdawn being a good example), and especially with exploding dice. This means most results are ordinary (same-y), and when they do get uncommon they have the potential to become truly extraordinary.

(But I’m not a fan of exploding dice. Even if they explode rarely, there’s always the possibility that the system goes completely out of control. Eventually it simply makes randomness king, and that randomness can strike at very illogical times. I understand the appeal, but I prefer something more structured.)
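To put the “out of control” worry into numbers, here’s a Python sketch for a single exploding d6 (roll the maximum, roll again and add). This is the generic exploding-die rule, not any specific game’s variant. The tail never reaches zero: each extra 6 only divides the chance by six.

```python
from fractions import Fraction

def p_explode_at_least(target: int, sides: int) -> Fraction:
    """Exact chance that an exploding die (reroll and add on the max face) totals >= target."""
    if target <= 1:
        return Fraction(1)
    if target <= sides:
        # Faces target..sides all qualify; the max face qualifies even before exploding.
        return Fraction(sides - target + 1, sides)
    # Above the max face you must roll the max, then make up the rest on the reroll.
    return Fraction(1, sides) * p_explode_at_least(target - sides, sides)

for target in (6, 7, 12, 13, 19, 25):
    print(target, p_explode_at_least(target, 6))
```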

…And then I thought, what happens if I try to mix up everything?

My current system, at a basic level, bases the to-hit roll on the standard d100: you have a % skill, roll 1d100, and need to roll under your skill.

…What if I replace the d100 with a 2d50 (because being based on computer code I can afford something weird)?

The resulting bell curve is flatter (a triangle, really), but we still get that “realistic” effect of more predictable results. The whole range from 2 to 23 adds up to just 10%. At 26 the probability of that single total is 1%, matching the linear system; it grows to 2% at 51, and then drops back to 1% at 76.

- 2-23 = 10.12%
- 24-75 = 76.88%
- 76-100 = 13%
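These figures can be verified exactly. This Python sketch counts the ways to make each 2d50 total instead of enumerating all 2,500 rolls, and also prints the 2-10 band for comparison with critical ranges.

```python
from fractions import Fraction

# 2d50 totals: there are (s - 1) ways to make s up to 51, then the count falls again.
ways = {s: min(s - 1, 101 - s) for s in range(2, 101)}
TOTAL = 50 * 50

def share(low: int, high: int) -> Fraction:
    """Exact chance that a 2d50 total falls in [low, high], inclusive."""
    return Fraction(sum(ways[s] for s in range(low, high + 1)), TOTAL)

for low, high in ((2, 23), (24, 75), (76, 100), (2, 10)):
    print(f"{low:3d}-{high:3d}: {float(share(low, high)):.2%}")
```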

There are two direct consequences of this experiment. The first is that I have to redo the criticals, since the range 2-10 is less than 2% (1.8%). For criticals GURPS uses 3-4 and 17-18 (plus a few quirks, but let’s leave those out), which is also less than 2% each (1.85%).

But more importantly, the whole idea of score percentages goes out the window, because your skill value no longer maps 1:1 to the probability of success. If anything, it becomes misleading.
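A quick Python check of how misleading the label becomes: with a roll-under 2d50, the printed skill and the real chance agree near the middle and drift apart toward the ends.

```python
from fractions import Fraction

def p_2d50_at_or_under(skill: int) -> Fraction:
    """Chance that 2d50 rolls at or under `skill` (roll-under, like the d100 version)."""
    hits = sum(1 for a in range(1, 51) for b in range(1, 51) if a + b <= skill)
    return Fraction(hits, 2500)

for skill in (10, 25, 50, 75, 90):
    print(f"skill {skill}%: actual chance {float(p_2d50_at_or_under(skill)):.2%}")
```

A “25%” skill succeeds 12% of the time and a “75%” skill 87% of the time.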

So I thought… let’s go further and make more radical changes. I keep the 2d50, but with score percentages gone I might as well turn it upside down, so you no longer roll UNDER but OVER (as with Dark Dungeons above). Let’s say that instead of rolling under a target number you have to reach a fixed value of 100. The bell curve of the dice gives the baseline number: that’s random chance, and it follows the bell curve, with the most typical results (about three quarters of them) in the 25-75 range. To reach 100 I would then need some sort of skill value spanning 25-75 (at minimum), of course.
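A Python sketch of the roll-over variant, assuming the skill value is simply added to 2d50 and a total of 100 or more succeeds (whether exactly 100 counts is my assumption):

```python
from fractions import Fraction

TARGET = 100  # fixed number to reach, as described above

def p_reach_target(skill: int) -> Fraction:
    """Exact chance that 2d50 + skill reaches TARGET or more."""
    hits = sum(1 for a in range(1, 51) for b in range(1, 51) if a + b + skill >= TARGET)
    return Fraction(hits, 2500)

for skill in (0, 25, 50, 75, 98):
    print(f"skill {skill:2d}: {float(p_reach_target(skill)):.2%}")
```

A skill of 50 comes out at 52.96% rather than 50%, so the opacity problem is still there, just flipped.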

In the end I would have mapped GURPS onto a wider 2-100 range with a flatter “bell”, then reversed it so you have to roll over, using a fixed target number instead of a variable skill value. It’s not impressive, but it should work fine.

The bell curve is intrinsically counterintuitive: there’s no quick way to work out the exact %. That aspect is unfixable. Linear systems are immediately explicit, whereas bell curves are more opaque but better simulate certain behaviors.

At this point I started going a bit too far and wild with ideas. For example, I imagined a system born from the desire to have melee combat where every attack carries momentum into the next. Instead of having a fixed skill value, it might work like this: first you roll the dice, let’s say you get a 30, so now you need at least 70 to reach 100. And you have a skill “pool” to draw from, which gives you a tactical option. Either you “push” the attack and spend 70 points (maybe leaving yourself open to a counterattack, if you spend too much on the attack and don’t have much left for defense), or you don’t spend those points and wait for the next turn, hoping for a more favorable roll. It even gets tactically interesting because you could try to “build up” an attack, but it would always be a gamble: if you got hit before your next attack, you would lose all your momentum.

…So I’d have a system where every turn you get a “pool refresh”, maybe based on Dex, or Initiative, or both, and where you carry over the values from the previous turn, in one flow.
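Here’s a minimal Python sketch of the momentum idea, just to see whether the bookkeeping holds together. Everything in it is hypothetical: the `Fighter` fields, the fixed per-turn refresh, and the rule that getting hit empties the pool. Defense spending isn’t modeled.

```python
from dataclasses import dataclass

TARGET = 100  # fixed number to reach, as in the roll-over variant

@dataclass
class Fighter:
    pool: int     # hypothetical skill pool carried over between turns
    refresh: int  # hypothetical per-turn refill, e.g. derived from Dex and/or Initiative

def take_turn(f: Fighter, roll: int, push: bool) -> bool:
    """Refill the pool, then either push the attack or hold. Returns True on a hit."""
    f.pool += f.refresh
    needed = max(0, TARGET - roll)
    if push and f.pool >= needed:
        f.pool -= needed
        return True
    return False  # held back (or couldn't afford it): the pool carries over

def get_hit(f: Fighter) -> None:
    """Being hit before your next attack wipes out the built-up momentum."""
    f.pool = 0

knight = Fighter(pool=0, refresh=30)
print(take_turn(knight, roll=30, push=True))  # False: 30 in the pool, 70 needed
print(take_turn(knight, roll=30, push=True))  # False: 60 in the pool, 70 needed
print(take_turn(knight, roll=45, push=True))  # True: 90 in the pool, 55 spent
print(knight.pool)                            # 35 carried into the next turn
```

Whether to push is left to the caller (player or AI), so the whole tactical layer lives in that one decision.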

This gets too fiddly, with too much bookkeeping even for a computer system. But maybe I can replace those numbers with “tiers”, where every tier is +5 points. That way I can mask the lack of explicit percentages inherent to the 2d50 (while retaining its benefits).

…But that would have gone against ANOTHER idea I had. The problem with skill-based systems is that the basic stats become almost irrelevant. Once you have your skill value, that’s all that matters, and the stats only give some weak bonuses here and there. The D&D d20 isn’t that different; in fact it isn’t skill based but class based, so it’s the class that has the biggest impact on what your character can or cannot do.

My idea here was to base the progression of a skill directly on the stats it’s derived from. The basic stats you generate during character creation would be your natural disposition. So, for example, a guy with very high Dexterity makes a potentially amazing archer. But it’s not automatic: you only get good if you practice enough. On the other hand, if you have little talent you can practice as much as you want, but you will still struggle a lot compared to the other guy. How does this translate into the game? You make SKILL progress depend on the STATS, so your improvement in a skill over time depends directly on the stats that govern it. You could still focus hard and improve a skill that doesn’t come naturally to you; you’d just see slower progress than someone with natural talent. …But all this clashes with the idea of fixed skill tiers (because the size of each improvement would vary with the stats).

How to fix this? Well, I thought of separating the systems. You have “points” (like the souls in Dark Souls) that you spend to buy skill “tiers”, but the COST in points of the next tier depends on the stats. So a guy with high Dex will have to spend fewer points to buy a tier in the bow skill than a guy with lower Dex. And the tiers still end up a fixed +5!
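A minimal Python sketch of the points-for-tiers idea. The cost formula (base cost times the tier number, scaled by 10 divided by the stat) is purely my placeholder assumption; only the flat +5 per tier comes from the text above.

```python
TIER_BONUS = 5   # each tier adds a flat +5, as described above
BASE_COST = 100  # hypothetical: points for the first tier at a stat of 10

def tier_cost(current_tier: int, stat: int) -> int:
    """Hypothetical cost of the next tier: grows with the tier, shrinks as the stat rises."""
    raw = BASE_COST * (current_tier + 1) * 10
    return -(-raw // stat)  # ceiling division, so costs stay whole points

def skill_bonus(tiers: int) -> int:
    return tiers * TIER_BONUS

def tiers_affordable(points: int, stat: int) -> int:
    """How many tiers a character can buy, in order, from a pool of points."""
    tiers = 0
    while points >= tier_cost(tiers, stat):
        points -= tier_cost(tiers, stat)
        tiers += 1
    return tiers

for dex in (15, 8):
    t = tiers_affordable(600, dex)
    print(f"Dex {dex}: {t} tiers, bow +{skill_bonus(t)}")
# Dex 15: 3 tiers, bow +15
# Dex 8: 2 tiers, bow +10
```

The stat only changes the price, never the size of a step, which is what keeps the tiers fixed.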

And it goes on and on. I shouldn’t waste time writing it all down, but I kind of need to, or I risk going through the same ideas again and again.

In any case, taken as a whole, all this brainstorming led to a clunky (but potentially interesting, once massaged into a better form) system that looks VERY similar to the one used in The Riddle of Steel. Also because once you use a variable system like this one, you realize you no longer really need a target number. You just let the player decide the “intensity” of the attack and see what the opponent does with it. For example, a weak attack that would be an automatic “miss” in the classic system could still land here if the defender doesn’t defend, and still do a little damage (because in my system the to-hit roll influences the damage done by that attack). But maybe the defender chose that option to prepare a much bigger counterattack, not wasting his points on defending against a weak attack and pouring them all into his own, stronger attack… It’s tactics bound to a nice random bell curve!

And this brings me to the last point: I still need to do more basic research.

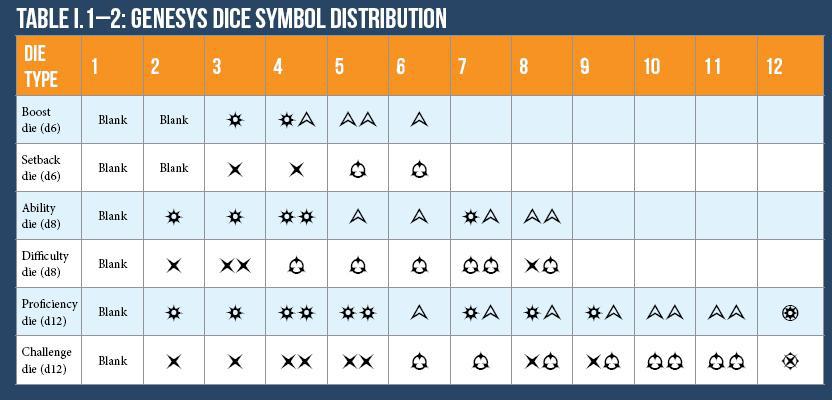

The argument isn’t just about linear systems versus bell curves. There’s a third option that is extremely popular, especially in more recent games: pools of dice rolled against a target number. The downside of these systems is that they are VERY OPAQUE, in the sense, again, that the probability of success isn’t immediately explicit and the calculations are non-trivial. Sometimes the rulebooks hand you a nice table, but quite often you are on your own. On the other hand, it’s a system that embraces both opposed rolls AND variable successes much more directly.
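To see why pools feel opaque, here’s a Python sketch for a generic pool where each die independently counts as a success or not; the number of successes then follows a binomial distribution. The d6 with success on 5-6 is an assumption for illustration, not the rule of any specific game, and opposed pools are not modeled.

```python
from fractions import Fraction
from math import comb

def p_successes(pool: int, k: int, p: Fraction) -> Fraction:
    """Binomial chance of exactly k successes when each die succeeds with chance p."""
    return comb(pool, k) * p**k * (1 - p) ** (pool - k)

def p_at_least(pool: int, k: int, p: Fraction) -> Fraction:
    """Chance of k or more successes from the pool."""
    return sum((p_successes(pool, j, p) for j in range(k, pool + 1)), Fraction(0))

# Assumption for illustration: d6 pool, each die succeeds on a 5 or 6.
HIT = Fraction(2, 6)
for pool in (1, 3, 6, 10):
    row = "  ".join(f">={k}: {float(p_at_least(pool, k, HIT)):6.2%}" for k in (1, 2, 3))
    print(f"{pool:2d} dice  {row}")
```

Even this simple case needs a table to read; adding dice and raising the required successes move the odds in very different ways.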

It’s no longer a binary hit/miss, but how good the hit was and how bad the miss. In some elaborate systems, like those used by Fantasy Flight, you get direct “narrative” side effects:

That, too, can be taken out of the narrative and mapped in a more mechanical way, but I think it’s still too fiddly.

I certainly need to research the basic advantages and disadvantages of a dice pool compared to the other two. Only when I know all the technical details will I be able to do some fancier experiments.

Squaring a circle would be too trivial, so I’ll have to find some way to take this triangle and square it into this circle. Possibly with a very big hammer.

(All the while, reinventing wheels. If it wasn’t already painfully obvious.)