Fascism always exists upstream, with its delusional control of truth values. But only until reality inevitably comes crashing down.

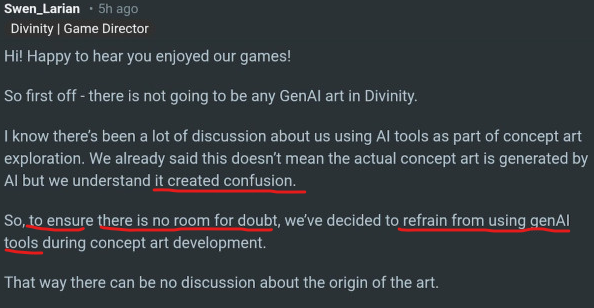

Taking a cue from the fascist Trump administration, having seen how easily they can manipulate and gaslight a very obvious murder into “self-defense”, Larian decided they could try gaslighting their fans into believing they “listened” to the protests and corrected their course, while doubling down on their AI use.

And it’s done through the well-trodden path of hiding their tracks.

Well, that’s great, right? There’s absolutely nothing to criticize here.

The problem is that the message doesn’t stop there, even though many eyes will glaze over what comes next. And the message proceeds to radically UNDO and contradict everything it claimed in that first part:

Before you can even get to the stage of figuring out if AI can help or not, because, FOR SURE THEFT ALWAYS HELPS, maybe you should figure out what the fuck you’re actually doing and using, right? Just because you “try things” in a dumb, clueless way doesn’t absolve you from responsibilities.

Illegally appropriating the job done by someone else is surely a safe and proven way of “doing things faster.” It just works! I don’t even know why you want a debate on the usefulness of theft. All tech companies have figured out that theft is a successful model, the fastest path to getting rich, especially when governments are now completely corrupt.

So now you are using AI widely “across departments”, having decided that people’s criticism was ONLY ABOUT THE ART. So you figured out that EVERYTHING CAN BE STOLEN, just as long as you don’t hurt some artistic sensibilities. Everything else must be up for grabs, right? Steal as fast as possible, loot this miserable world until it’s all gone.

Because that’s the point: everyone is doing the same. Everyone is participating in this looting, so who’s innocent? And if no one’s innocent, how can anyone be guilty?

We have successfully isolated this problem of “artistic origin”, then, having cleansed every other form of theft. So now let’s cleanse the artistic side as well, so that WE CAN STEAL WHATEVER WE WANT?

“Without being 100% sure about the origins of the training data.” 100%! Do you know math? 100% is all there is. This is not a tactical game with a 110% chance to hit; the d100 only goes 0-99, or 1-100. Statistics! Larian makes RPGs, after all.

“Then it’ll be trained on data we own.”

Oh, nice. So, if this weren’t just a factually false statement, the only way to make this part “true” would be for Larian to now be in the AI business, developing their own proprietary LLM in-house. They would be setting up their own server farm (good luck buying all the RAM required, considering you are trying to set foot in what’s actually an oligarchy) and hiring engineers to build an AI model from scratch. Because, technically, no other way exists.

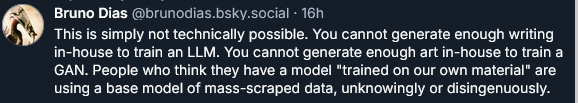

Even assuming this obviously absurd scenario, there’s no way Larian could do that, because when we speak of LLMs (we’re really barely past kindergarten level here), we speak of something that SOLELY operates through “large amounts of data”, where “large” is a euphemism for something HUGE. LLMs are heuristics (much like the human brain); they are statistical models. No matter how perfected the algorithm upstream is, the output can only be remotely useful when INCLUDING THE TRAINING DATA.

Unless Larian is developing an LLM from scratch, they DON’T OWN THE DATA. And even if they were, and fed it everything they have produced throughout their own existence, for another HUNDRED YEARS, that would probably still be less than 2% of the amount of data necessary for anything remotely useful. And that’s without even counting how much work, time and resources performing and fine-tuning that training would require. We are speaking of highly specialized work that ALSO has a cost.

It’s no coincidence that all those proprietary LLMs have been built coupled with “large amounts of data” that were factually stolen. Or “legally” stolen because, again, when corruption runs rampant you can just bend the rules. No company has enough data to feed any LLM. Even completely stripping their own employees of every right still wouldn’t be enough to train an LLM. You need MORE. You need access to everything.

But here we have Larian, the ingenue. They only use a piece of technology they do not understand. They merely want to make a product faster to sell, as is their capitalistic purpose. Chop, chop. Move on. If they simply, innocently use a tool, they DO NOT SEE anything the LLM has eaten and digested. They do not see the stolen data. They do not see the stolen art. They just FEED THEIR OWN to this cancer, spreading and devouring more. It’s just “their own end” of the bargain. They just use “the prompt.” Once a body has been masticated, it ceases to be a body. It’s blood and gore now.

So, let’s cover ourselves in blood and gore, to participate in this ritualistic sacrifice that is humanity.

Larian saying that they’ll use LLMs on “data they own” is a euphemism for saying they intend to continue stealing precisely as they’ve been doing. Nothing changes, because as long as they can persuade their fans that LLMs “start where you see them”, with their prompt, and their actual process is occluded and hidden away from awareness, then… everything can be legally stolen because it can’t be tracked. And they intend to continue to participate in that vicious looting.