This is the end, my friend. Like Achamian, we merely get a seat in the front row, to watch. Enjoy the show.

Great advances we’ve made through 2025!! We’ve now discovered that STEALING SOMEONE ELSE’S WORK is easier and faster than doing that work yourself! Such a novel concept!

Did you know that you’re far more productive and can make far more money by stealing from others, rather than making an effort on your own?!

Thieves are indeed geniuses who solved all problems!

Easy profits for everyone!

To be honest, while I followed Bakker’s blog from its very first days, almost 15 years ago, I didn’t take seriously all those “rants” against AI. About how AI would indeed at some point start to produce actual stories and replace authors. It was obviously something that Bakker felt hitting close to home, being himself a writer, but to me that threat seemed far-fetched, something whose place was in a cautionary science fiction story, whose value was in being abstract and symbolic, the analysis itself. Themes of consciousness and epistemology, but less about a practical, imminent threat. But here we are: Bakker was right even at the fringes of that argument.

And it is somewhat ironic to me that now my own view on the current AI is “conditioned” not by some technical insight, but by the perspective given to me by those studies on consciousness and epistemology.

In the latter part of his activity Bakker was quite proud of having actually published his own work in the scientific space, titled “On Alien Philosophy”:

Given a sufficiently convergent cognitive biology, we might suppose that aliens would likely find themselves perplexed by many of the same kinds of problems that inform our traditional and contemporary philosophical debates.

He was trying to prove that, given the same structures, the problems we faced within philosophy of consciousness would be the same problems you’d “naturally” expect to run into. It’s just another way of saying that these problems were inevitable, logically occurring, given the circumstances. Given the evolution of the brain. That the problems we see are the problems we should EXPECT to find, given the structure within which we operate.

The problem of today’s generative AI is essentially the same: if you have understood the nature of humanity and of human consciousness, then it would seem simply natural that we’d collectively fall INTO THIS TRAP. Because of our present circumstances. Given our own true nature, our internal makeup, we should know that we are vulnerable precisely to this type of pitfall.

Because the “true nature” of generative AI, both in its economic and practical aspects, is one of OCCLUSION. Of deception. The greater part of the function of AI is epistemic, becoming the natural “crash space” for human culture as a whole. We can start from the very simple concept of “private property.” We should be aware that, as with language, private property doesn’t truly exist “out in the world.” It is not a physical property, it’s not a law of physics. It’s simply an abstract concept whose value and meaning are fully contained in human culture. We decide and we agree, as a collective, to give private and intellectual property a meaning. Then we have laws to enforce all that. You can argue that animals have some form of the same, that they defend “territory,” because in the end that is precisely what it is: application of strength. You impose a rule, as long as you can impose your will.

But what happens when a powerful group of people decides that laws don’t apply anymore? Or more precisely, don’t apply to them?

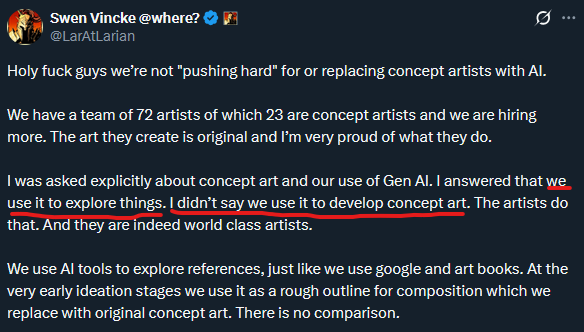

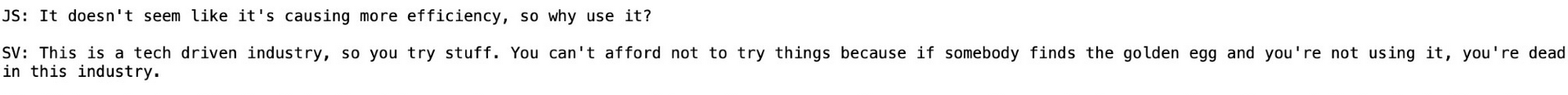

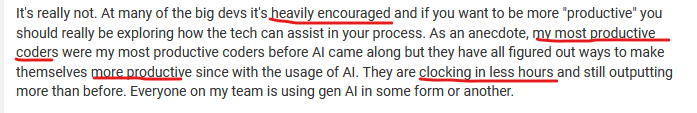

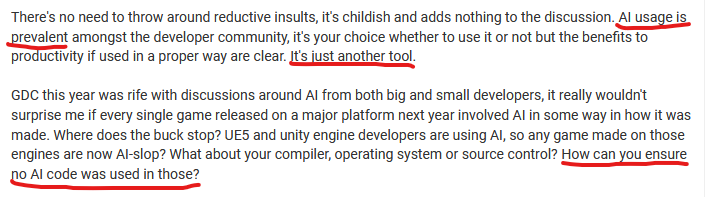

In the early days it was only the artists who opposed AIs, whereas programmers more warmly embraced the “new tools” whose usefulness was more immediately tangible. It’s only normal given that illustrations are felt as more immediately “artistic”, made of that ineffable quality of creativity, whereas programming is seen as a more logical, objective field where knowledge weighs more than personal artistry. But what is the difference, in the world? What is the actual truth? What is, truly, creativity? My point here is not about finding convenient answers to these questions, but to indicate that the nature of occlusion, of NOT KNOWING what an artistic process actually is, is what enables generative AI to “perform” its own “scam.”

Because it’s all one GIANT SCAM we’ve all collectively fallen for.

What generative AIs are doing, from an epistemological point of view, is to HIDE the causal chain that links the input to the output. If you decide to copy the work of an artist, to appropriate it and sell it as your own, then you’re committing a CRIME. Because the original artist can find out what you just did, and sue you. Because he can then easily prove that he was the source of that original art. And there’s obviously a system of laws that regulates all of this. What generative AIs do in practice is to “mix the sources”, rather than doing a 1 to 1 copy, so that they STEAL FROM MANY, in a way that cannot then be easily traced back. They “hide the process” of their algorithm so that it’s no longer possible, in practice, to prove from what input the output was generated. Therefore, in the absence of an evident cause, the machine APPROPRIATES the process: we say that this output is a CREATION of the tool itself. So that it can be SOLD.

Generative AIs are not only “money laundering”, but also laundering the product itself, so that it can be STOLEN from the original authors and SOLD again by thieves.

On this level, artistic illustration and software programming are exactly the same. It’s funny how the programming world is a minefield of different licenses, whose subtle differences can only be untangled by lawyers. Because until today we’ve taken all this very seriously. It’s very complex. So complex that to avoid problems we have concepts like “clean room.” You cannot simply look at some code and then reimplement it for your own application, because you can still be accused of using a solution that belongs to someone else. “Clean room” identifies a process where the programmer explicitly avoids even LOOKING at the code, to prevent any form of bias, so that the final implementation will be fully “original” even if the process itself produces the same (or a similar) result.

We’ve always taken licenses very seriously because as a society we’ve always taken intellectual property and personal contribution very seriously too.

But what happens when some Evil Corporation takes the gigantic body of work that makes up open source code, and uses it as a training ground for its own proprietary “tool”? Since the outputs are “scrambled” we can no longer prove that these lines of code have been copied, and so stolen, from here. It’s still THEFT, but it’s hidden, the process occluded. An output without any evident input, so an output that fully belongs to whoever is holding it. A thief who’s laundered the money, so that the money is his.

Human progress exists solely in the NEGATION of intellectual property. Because if every man owned exclusively his own discoveries, then his knowledge would always die with him, and every newborn would have to start from absolute zero. As collective humanity we would have gone nowhere, because our own collective horizon would be bound to that 100-year yardstick. We would be stuck in stagnation. The thievery perpetrated by generative AIs is all the more insulting because we’ve collectively created this large body of work that we call “open-source”, so that it’s available to all humanity. Now we have these techbro thieves who found a “tool” that lets them STEAL this collective resource, and APPROPRIATE IT as if it’s now their own. Literally: rich people looting the community with impunity guaranteed by corrupt governments.

Nothing is “lawful” anymore. The thieves bought the governments and it’s corruption all the way down (and up).

We are all so collectively drunk on the idea of private property that we believe these billionaires can build their own AI factories “with their own money,” as is their right. Without understanding that this is just another human abstraction with no root in the real world. The worldwide economy is not built into neat, independent packages, it’s all one thing. If “gamers” notice the problem first, because of hardware prices skyrocketing, it’s because hardware is adjacent. But wait long enough and you’ll see how YOU WILL ALL PAY for those AI factories with the cost of your groceries. It’s all one system. We are all paying for this worldwide displacement of investments. We make these thieves rich by taking a trickle for ourselves, empowering this process until nothing is left. Because these thieves also let you “resell” what they stole, so that you are perfectly complicit in the process. So that this thievery includes you, by sharing responsibility, but always returning ultimately to their pockets. Wilful, complicit thievery.

All those people who are now rejoicing over the great innovations that AIs have brought and will bring in the future are people who will lose their jobs in the next weeks and months, who will see their families destroyed, who will pay with their health. Who are welcoming into their houses those same thieves who will loot and burn them to the ground. The Trojan horse that is AI will destroy human culture and wealth at their very foundations, in a way that won’t be recoverable. We are indeed about to witness the apocalypse of man. Or rather, the ultimate celebration of human stupidity.

Imagine a very simple rule to regulate generative AIs: everything that comes from the community needs to return to the community. If something is the product of generative AIs, then it cannot be appropriated and owned. It can’t be sold. It can’t be used for profit.

One simple rule, and the whole field would immediately self-regulate, because it would immediately exclude the thieves. And stealing is the whole point here. Rich people stealing from all of us. But in reality those few thieves have enslaved the world, and we are all complicit in our own, now inevitable, collective end.

The view from here is crystal clear. Enjoy the show.